In this article about blocking sockets:

- What is the API and how to use it.

- What makes it blocking, and why that matters.

- Developing an Echo Server as an example.

TLDR

- TCP sockets are the lowest level of IO programming you can do in Java/Scala.

- TCP is implemented by the Operating Systems (OS). The JVM merely allows you to tap into that.

- Blocking sockets will block the calling thread when 1) accepting connections, and 2) reading data from, and 3) writing data to, a connection.

- Blocking sockets require 1 thread per connection.

Introduction

Mastering TCP sockets will change your abilities as a programmer.

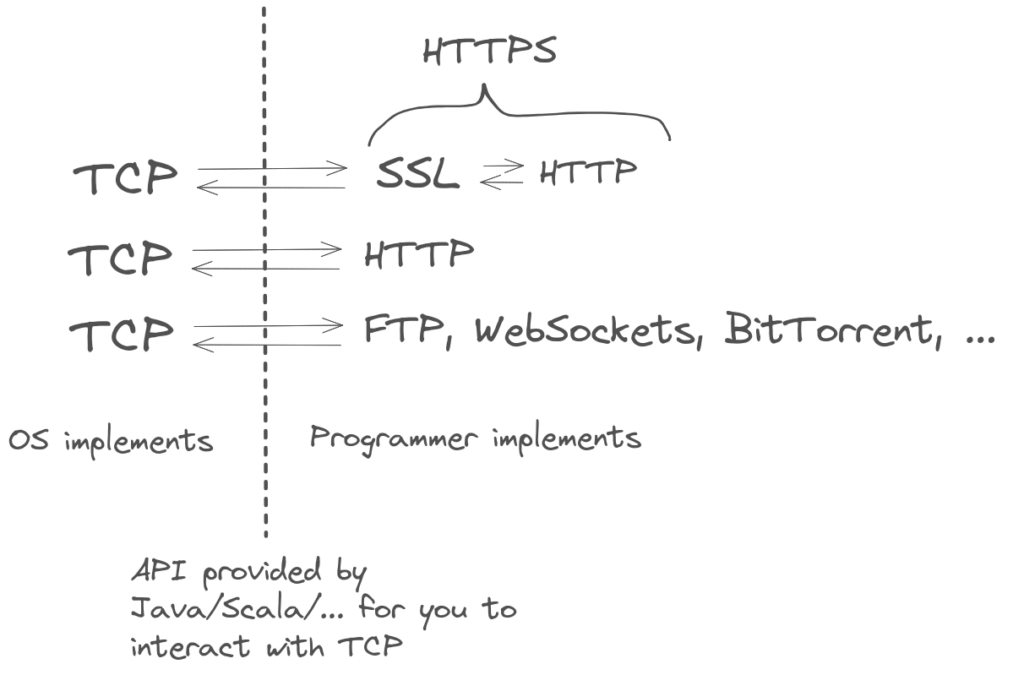

In today’s world, most developers focus on web apps, whose defining characteristic is network I/O. Here, network I/O means TCP, as most apps are essentially some wiring of HTTP calls, WebSockets, and database access.

Here’s the crux: sockets are the API for TCP that programming languages provide. Therefore, all the network-related libraries that you use in your applications, directly or indirectly, such as HTTP clients, HTTP servers, database drivers, and Kafka libraries, must ultimately rely on TCP sockets. They cannot bypass this, as you cannot go lower than TCP sockets [1].

Mastering TCP sockets allows you to understand one level of complexity below that of all the client libraries you use, giving you valuable insights.

Furthermore, sockets have their own particularities and access patterns, which, on apps where networking is dominant, will completely define how they are built. For example, choosing between blocking vs. non-blocking sockets defines the threading model of both http servers and http clients.

[1] Unless you use the Java Native Interface. But that’s not really standard Java.

Let’s make it clearer what we are talking about. TCP is the protocol for reliable information exchange. And sockets is the generic term we use to refer to the classes and objects in Java and Scala (and other languages as well) that allow us to interact with TCP.

The first key thing to understand is that TCP is implemented by Operating Systems, not by applications. Java and most other languages simply provide an API that interfaces with the TCP implementation of the underlying OS.

Note

Terminology

TCP is the protocol for reliable information exchange. In the formal context of this protocol, a socket is the tuple of IP address and port number that uniquely identifies a TCP/IP application. At the same time, socket is also the name of the classes in programming languages (namely Java) allowing the users to interact with the TCP implementation. Such classes represent not only the IP address + port, but the entire connection itself, and the means to use it.

You must recognize from the context which definition the term refers to. In this article, by a socket we normally refer to the second meaning.

Lastly, across the Internet, there are also mentions of raw sockets to mean sockets that are one level below the TCP layer, at the IP layer. These allow you to create your own TCP-like protocols. But such raw sockets are not directly available on the JVM.

There are 3 APIs to use TCP IO in the JVM world:

| API | Description |
| --- | --- |
| Blocking | Oldest way. Has always been available. Key feature: one thread per socket. |
| Non-Blocking | Introduced with the NIO API in release 1.4, from 2002. Key feature: one thread for many sockets. |
| Async | Newest way. Introduced with the NIO2 API in release 1.7, from 2011. |

These correspond to 3 different sets of sockets and classes.

This categorization is not particular to the JVM world. Many languages have this division because they mirror the capabilities developed by the Operating System. In fact, all this discussion is applicable to other languages and platforms.

Important

Blocking, Non-Blocking, and Asynchronous sockets can be seen as 3 APIs on a programming language for TCP. It should be evident that the two TCP-connected endpoints on distinct hosts within a network are not obligated to exclusively utilize either blocking, non-blocking, or asynchronous APIs. You can have one host using blocking calls, and the other async calls. Taken to the extreme, you can have whatever you want on one end, provided it respects the TCP protocol and has the ability to encode the required bytes onto the network hardware.

The release of non-blocking sockets with NIO had a particularly big impact. Until then, HTTP servers required a JVM thread for each open connection. Because a thread has a cost in memory, you were limited in how many connections a single server could keep open. For example, with a thread stack size of 1 MB, 100K connections would require about 100 GB of memory. We can set a much lower value for the stack size, but that is limiting, as it affects all threads and cannot be changed dynamically. This issue is related to the well-known C10K problem, a term coined by Dan Kegel to describe the difficulty web servers had handling more than 10K concurrent connections around the year 2000.

The study of non-blocking sockets will be left for another article. However, although the API is different, many of the concepts discussed in this article, which focuses solely on blocking sockets, are relevant to non-blocking and asynchronous sockets as well.

Overview

Before we delve into the API details, we must understand TCP at a high level. Contrasting TCP and its sockets with UDP provides valuable insights.

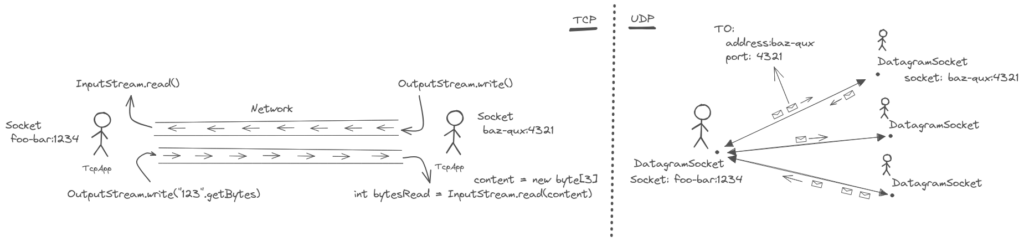

TCP is essentially two streams that abstract the reliable flow of data between two hosts over a network. The stream facade shields the programmer from the complexities required to implement the many features of TCP. TCP sockets are both the means and the API through which one uses TCP in a programming language.

UDP is a much simpler protocol. In fact, it is only a thin layer over the IP protocol. It provides almost no features at all, and certainly no reliability or ordering. Programmers also use UDP sockets (called DatagramSocket) to interact with UDP. I have discussed UDP in the BitTorrent Tracker protocol article.

A key point to understand is that the features, complexities, and particularities of each of the protocols “leak” onto the respective API and their sockets. For example, a TCP socket is bound to a single remote host. That is not the case for UDP.

- TCP is connection oriented (UDP is not)

  A Socket object is associated with a single remote. It can send data only to it, and receive data only from it. It is not possible to send to any other address after the socket is created or bound. A connection implies the management of state (of the connection), although such state is not visible to the application and is managed by the OS.

  This contrasts with UDP, which is stateless, where a single socket object can send to, and receive from, distinct remote hosts. In fact, each message sent must contain the socket address of the destination, and each message received contains the socket address of the host from which it came.

- TCP is stream based (UDP is message based)

  The abstraction is a pair of streams: InputStream to receive from the remote, OutputStream to send. It is similar to the abstraction for file IO. This means there is a continuous flow of data, and importantly there are no message boundaries. For example, above, sending the string 123 within a single write call does not guarantee that the receiving end will read it in a single read call. It’s perfectly possible for one call to return 1, and separate subsequent calls to read to return the remaining 23.

  UDP, on the other hand, works with the concept of messages (called datagrams or packets). Boundaries are preserved. If a host sends 123 in a single message, the remote end will receive the data in a distinct self-contained message with exactly the same contents.

- Other features:

  - TCP maintains order. Send 1, 2, 3 for the remote to receive the same sequence. UDP might swap the order of the “messages” sent.

  - TCP is reliable. What you send is received (unless the socket breaks altogether). UDP might silently drop messages.
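The lack of message boundaries in TCP has a practical consequence: a receiver that expects a given number of bytes has to keep reading until they have all arrived. The following is a minimal sketch of that idea over a loopback connection. The class name StreamBoundaryDemo and the helpers readExactly and sendAndReceive are hypothetical names chosen for illustration, and port 0 is used so that the OS picks a free port.

```java
import java.io.EOFException;
import java.io.IOException;
import java.io.InputStream;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

public class StreamBoundaryDemo {

  // Hypothetical helper: keeps calling read() until exactly n bytes have arrived.
  static byte[] readExactly(InputStream in, int n) throws IOException {
    byte[] data = new byte[n];
    int total = 0;
    while (total < n) {
      // A single read may return fewer bytes than requested, even just 1.
      int count = in.read(data, total, n - total);
      if (count == -1) {
        throw new EOFException("Stream ended after " + total + " bytes");
      }
      total += count;
    }
    return data;
  }

  // Sends the message through a loopback TCP connection and reads it back on the other end.
  static String sendAndReceive(String message) throws Exception {
    byte[] payload = message.getBytes(StandardCharsets.UTF_8);
    try (ServerSocket server = new ServerSocket(0, 1, InetAddress.getLoopbackAddress());
         Socket client = new Socket(server.getInetAddress(), server.getLocalPort());
         Socket accepted = server.accept()) {
      client.getOutputStream().write(payload); // one write call on the sending side
      client.getOutputStream().flush();
      // The receiving side cannot assume one read call is enough, so it loops.
      byte[] received = readExactly(accepted.getInputStream(), payload.length);
      return new String(received, StandardCharsets.UTF_8);
    }
  }

  public static void main(String[] args) throws Exception {
    System.out.println(sendAndReceive("123"));
  }
}
```

On a loopback connection the three bytes will very likely arrive in a single read, but the loop is what makes the code correct when they do not.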

API

Regarding blocking sockets, there are 2 main classes to remember: ServerSocket and Socket. That is it.

Well, there are 2 secondary classes InetAddress and SocketAddress – which are also required by the other 2 types of TCP sockets, and also UDP sockets – that embody an IP address and an IP Address plus port number respectively. They are crucial as they uniquely identify TCP applications on a network. A SocketAddress is a telephone number, and Socket is both the telephone and the telephone line.
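As a small illustration of the two address classes, the sketch below builds an IP address and then an IP address plus port. It uses InetSocketAddress, the concrete subclass of SocketAddress that also appears in the listings further down. The class name AddressDemo and the helper loopbackEndpoint are hypothetical, and port 3081 is just an example value.

```java
import java.net.InetAddress;
import java.net.InetSocketAddress;

public class AddressDemo {

  // Builds the "telephone number": an IP address plus a port.
  static InetSocketAddress loopbackEndpoint(int port) {
    InetAddress ip = InetAddress.getLoopbackAddress(); // IP address only, no port
    return new InetSocketAddress(ip, port);            // IP address + port
  }

  public static void main(String[] args) {
    InetSocketAddress endpoint = loopbackEndpoint(3081);
    System.out.println("IP:   " + endpoint.getAddress().getHostAddress());
    System.out.println("Port: " + endpoint.getPort());
  }
}
```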

ServerSocket. Abbreviated.

package java.net;

import java.io.IOException;

public class ServerSocket implements java.io.Closeable {

public ServerSocket() throws IOException {}

public ServerSocket(int port) throws IOException {}

public ServerSocket(int port, int backlog, InetAddress bindAddr) throws IOException {}

public void bind(SocketAddress endpoint) throws IOException {}

// Characterizes ServerSocket. Does not exist on Socket

public Socket accept() throws IOException {}

// Why does this exist you might wonder

public void setReceiveBufferSize(int size) throws SocketException {}

public void setSoTimeout(int timeout) throws SocketException {}

public void close() throws IOException {}

}

Socket. Abbreviated.

package java.net;

import java.io.IOException;

public class Socket implements java.io.Closeable {

public Socket() {}

public Socket(InetAddress address, int port) throws IOException {}

public void bind(SocketAddress bindpoint) throws IOException {}

// Method does not exist on ServerSocket

public void connect(SocketAddress endpoint) throws IOException {}

public InputStream getInputStream() throws IOException {}

public OutputStream getOutputStream() throws IOException {}

public void setReceiveBufferSize(int size) throws SocketException {}

public void setSendBufferSize(int size) throws SocketException {}

public void setSoTimeout(int timeout) throws SocketException {}

public void close() throws IOException {}

}

Socket

When two TCP applications interact, one of them must initiate. The app initiating is always a Socket. Naturally, this socket must know the address of the remote. For this, the main constructor of Socket takes in an IP in the form of an InetAddress, and a port number. When that constructor call returns, it implies that a TCP connection was successfully established with the remote, at least at the OS level. This makes the call blocking, as there might be many reasons why that “interaction” takes a long time.

In contrast, the parameterless constructor returns an unconnected instance. The returned socket is then connected by an explicit call to connect, providing a SocketAddress (which contains both the IP and port). This 2-step approach allows you to further configure the socket before connecting. Namely, you can call bind(SocketAddress), which specifies the local TCP address to be used by the connection. If you don’t specify it, as happens with the other constructor, then the OS chooses the local IP and a free local port. If this confuses you, refer back to the illustration Overview TCP and UDP protocol: on a TCP connection between two hosts, each endpoint is described by two socket addresses: the local address and the remote address. Obviously, the remote address on one end corresponds to the local address on the other end.
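The 2-step approach and the mirrored addresses can be sketched as follows. This is only an illustration on the loopback interface: the class name TwoStepConnectDemo and the helper connectAndCompare are hypothetical, and binding to port 0 asks the OS to pick a free local port.

```java
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.ServerSocket;
import java.net.Socket;

public class TwoStepConnectDemo {

  // Returns {local port of the client, port of the client as seen by the server}.
  static int[] connectAndCompare() throws Exception {
    InetAddress loopback = InetAddress.getLoopbackAddress();
    try (ServerSocket server = new ServerSocket(0, 1, loopback);
         Socket client = new Socket()) { // step 1: an unconnected instance
      // Optional: choose the local address. Port 0 lets the OS pick a free port.
      client.bind(new InetSocketAddress(loopback, 0));
      // Step 2: blocks until the TCP connection is established.
      client.connect(new InetSocketAddress(loopback, server.getLocalPort()));
      try (Socket accepted = server.accept()) {
        System.out.println("client local : " + client.getLocalSocketAddress());
        System.out.println("client remote: " + client.getRemoteSocketAddress());
        System.out.println("server local : " + accepted.getLocalSocketAddress());
        System.out.println("server remote: " + accepted.getRemoteSocketAddress());
        return new int[] {client.getLocalPort(), accepted.getPort()};
      }
    }
  }

  public static void main(String[] args) throws Exception {
    connectAndCompare();
  }
}
```

The local address printed for the client should match the remote address printed for the server, and vice versa.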

Once the instance is properly connected, you can start receiving data via the input stream and write data via the output stream, which are obtained from the relevant accessor methods. You don’t need to read and write data sequentially. You can receive and write concurrently. For example, you can have two threads, one reading and the other writing.

There are certainly more intricacies regarding its operation. For instance, if the remote host terminates the connection, attempting to read from the input stream will yield -1. Additionally, closing either stream will result in the closure of the socket, and vice versa. For comprehensive information, it is recommended to refer to the Java documentation.
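The end-of-stream behaviour can be observed with a short sketch. Here the "remote" is a client on the same machine that connects and closes immediately. The class name EndOfStreamDemo and the helper readAfterRemoteClose are hypothetical.

```java
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

public class EndOfStreamDemo {

  // Returns what read() yields once the remote end has closed the connection.
  static int readAfterRemoteClose() throws Exception {
    try (ServerSocket server = new ServerSocket(0, 1, InetAddress.getLoopbackAddress());
         Socket client = new Socket(server.getInetAddress(), server.getLocalPort());
         Socket accepted = server.accept()) {
      client.close(); // the remote terminates the connection without sending anything
      // Blocks until the OS learns about the close, then signals end of stream.
      return accepted.getInputStream().read();
    }
  }

  public static void main(String[] args) throws Exception {
    System.out.println(readAfterRemoteClose()); // prints -1
  }
}
```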

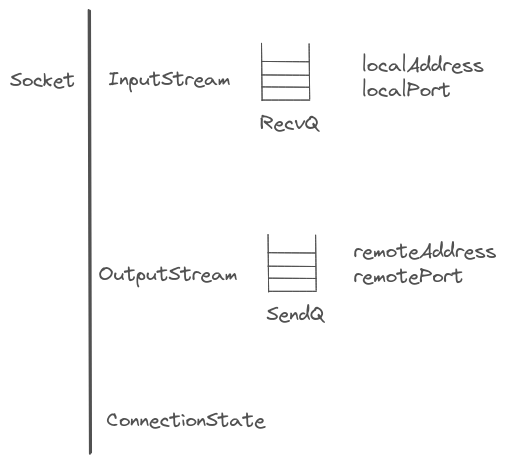

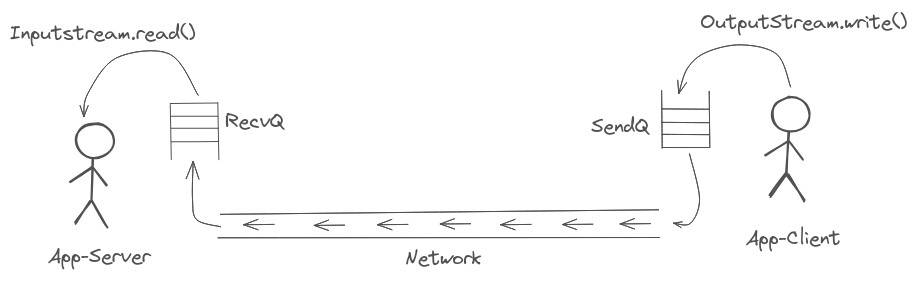

Let us recall that TCP is implemented by the OS. With this, a socket instance is associated with two queues. RecvQ is a buffer where the TCP implementation holds data that it has received from the remote but not yet read by the application. When an app reads from the socket with InputStream.read(), it is consuming from this buffer. Similarly, SendQ is used to hold data that the app is sending to the remote, but that is not yet with the remote. When an app does OutputStream.write(), and the call returns, it does not mean that the remote application received the data, but only that the OS accepted it and wrote it onto the send buffer.

Even though RecvQ and SendQ are managed by the OS, the application is able to set their size in bytes. The values passed on to setReceiveBufferSize() and setSendBufferSize() are normally honored by the OS, but ultimately are merely suggestions. Namely, the OS sets lower and upper bounds.
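One way to see that the sizes are only suggestions is to request a value and then ask the socket what it actually got. The sketch below does that on an unconnected socket. The class name BufferSizeDemo and the helper requestBufferSizes are hypothetical, and the values printed depend on the OS and its configuration.

```java
import java.net.Socket;

public class BufferSizeDemo {

  // Requests the same size for both queues and returns {actual receive size, actual send size}.
  static int[] requestBufferSizes(int requested) throws Exception {
    try (Socket socket = new Socket()) { // unconnected socket is enough for this check
      socket.setReceiveBufferSize(requested);
      socket.setSendBufferSize(requested);
      return new int[] {socket.getReceiveBufferSize(), socket.getSendBufferSize()};
    }
  }

  public static void main(String[] args) throws Exception {
    int requested = 1000;
    int[] actual = requestBufferSizes(requested);
    System.out.println("Requested: " + requested);
    System.out.println("Actual receive buffer: " + actual[0]);
    System.out.println("Actual send buffer:    " + actual[1]);
  }
}
```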

These queues are extremely important to TCP, as it is how the protocol achieves reliability and performance optimization.

From our perspective, we should have a basic understanding of how they work. As we will see further down, they relate to the blocking nature of write calls.

Server Socket

Whilst Socket initiates, the receiving end, which we call server, must be actively listening at the IP and port specified. This is done via ServerSocket. As with the client socket, the server socket can bind() to a particular local address. If an IP address is not specified, the system listens on all available IPs [2]. Naturally, the port to which the socket binds must be known, otherwise the connecting end (the Socket) couldn’t possibly connect.

[2] A computer can have many IPs.

Unlike Socket, creating an instance is not sufficient. One must afterwards call accept(), which makes the app wait for a client to attempt a connection. The calling thread will block until a client connection is available. The crucial thing to notice is that this method returns an instance of Socket. This instance is connected and is how the server will communicate with the client. This new socket behaves as described above, only the creation was different. In other words, the ServerSocket is “only” useful as a way to create valid, connected Socket instances that allow the app to read from and write to the remotes which initiated.

Two key aspects are noteworthy here. Firstly, the ServerSocket remains valid after the accept(), and continues to accept further connections. In essence, a single ServerSocket instance is used to enable the connection of multiple clients. Secondly, the spawned socket will share its local IP and port with the original ServerSocket.
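Both aspects can be checked with a short sketch that accepts several connections, one after the other, from the same ServerSocket. The class name MultipleAcceptDemo and the helper acceptedSocketsShareServerPort are hypothetical, and the clients are simulated on the loopback interface.

```java
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

public class MultipleAcceptDemo {

  // Accepts the given number of connections from one ServerSocket and checks
  // that every accepted Socket reports the ServerSocket's local port.
  static boolean acceptedSocketsShareServerPort(int clients) throws Exception {
    try (ServerSocket server = new ServerSocket(0, clients, InetAddress.getLoopbackAddress())) {
      boolean allShare = true;
      for (int i = 0; i < clients; i++) {
        try (Socket client = new Socket(server.getInetAddress(), server.getLocalPort());
             Socket accepted = server.accept()) {
          System.out.println("accepted local port: " + accepted.getLocalPort()
              + ", remote port: " + accepted.getPort());
          allShare &= accepted.getLocalPort() == server.getLocalPort();
        }
      }
      // The ServerSocket is still open and usable after all the accept() calls.
      return allShare && !server.isClosed();
    }
  }

  public static void main(String[] args) throws Exception {
    System.out.println(acceptedSocketsShareServerPort(3));
  }
}
```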

Note

The labels server and client reflect the fact that frequently the host listening passively will provide resources or services to many clients which initiate the connection. But this is not invariably so. The only difference is that the client must know the address and port of the server, but not vice-versa.

For example, a 1-to-1 bidirectional conversation via TCP would not have a server in the traditional sense. Another example is the BitTorrent protocol where TCP is used for exchange of data between two hosts which the protocol refers to as peers.

More

Let’s explore further with an example. The snippet below:

- Creates a server socket listening on a particular port.

- Accepts a single TCP connection.

- Prints to the terminal everything the remote app sends.

import java.io.IOException;

import java.io.InputStream;

import java.net.*;

public class TcpServer {

// Imagine the parameters (e.g. localPort) are parsed from input args

public static void main(String[] args) throws IOException {

ServerSocket serverSocket = new ServerSocket();

serverSocket.setReceiveBufferSize(receiveBufferSize);

serverSocket.bind(new InetSocketAddress(localAddress, localPort), backlog);

// blocks the calling thread for indefinite period of time.

Socket socket = serverSocket.accept();

InputStream in = socket.getInputStream();

while (true) {

int mayChar = in.read(); // we are reading one byte at a time

if (mayChar == -1) {

System.out.println("Stream ended. Exiting.");

System.exit(0);

}

System.out.print((char) mayChar);

}

}

}

Tip

Copy the snippet to your IDE, specify values 1000, 0, 3081 and 10 for parameters receiveBufferSize, localAddress, localPort, and backlog, and start the app. I am only showing the code for the server. To simulate the client, you can use netcat (available on all OSes). Just fire nc localhost 3081 on another terminal and start typing. The IDE should immediately print what you write.

The value of 0 for the IP sets the app to listen on all available IPs. Which includes the loopback IP localhost.

Above, instead of creating the socket and binding it in separate statements, we could use the other constructors to bind it during creation.

There is, however, a subtle difference. It is a detail, but it illustrates the interplay with the OS. With the parameterless constructor, you have a chance to set the read buffer size before binding the socket. The provided size will then be used by all the sockets accepted from the ServerSocket. But why bother? Why not just set the size after the Socket instance is returned? As we will see below, because after the ServerSocket is bound, but before a connection is accepted by the app, a fully established TCP connection exists at the OS level, which can already receive data from the remote!

The role of the Operating System

As mentioned before, the TCP implementation is a service provided by the OS. Programming languages merely leverage system calls to interface with this implementation. It is insightful to see what happens to the OS’s TCP structures associated with the connections of our app at various stages. On Linux, enter watch -n 1 'ss -t -a --tcp | grep -P "Recv-Q|3081"' on a new terminal. Additionally, you need to edit the snippet to add a Thread.sleep() as required to hold the app in the desired state.

Tip

ss is netstat on Windows and Mac. watch is the same on Mac but you might have to install it. I don’t believe a similar command exists on Windows. Instead, you might have to use a loop on a script, or else issue the subcommand several times manually.

We would see the following output just after the socket bind, but before the accept() :

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 1 192.168.1.110:3081 *:*

Where 192.168.1.110:3081 is the local address we chose. Had we provided 0 for the IP, we would see:

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 1 *:3081 *:*

Meaning the app listens on all available IPs. Furthermore, the value *:* for the peer address makes sense since the server socket can receive connections from any remote source.

After a remote TCP application connects to this address and port, we would start seeing:

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 1 1 *:3081 *:*

ESTAB 0 0 192.168.1.110:3081 192.168.1.103:55256

The extra socket represents the new connection, but the original socket (of the ServerSocket variety) remains unchanged, and ready to create new sockets.

Note

The IP 192.168.1.103 corresponds to another computer of mine in my home’s private network. If you issue the command from the same host you would see the localhost’s IP: 127.0.0.1

There is a certain disconnect between the data structures of the OS and the corresponding ones the Java application sees. In particular, the establishment of a TCP connection occurring at the OS level does not depend on the application doing ServerSocket.accept(), but only that it is bound.

After the server socket is bound, the OS will listen for, and if a client initiates, will establish a fully fledged TCP connection, including 3-way handshake. The remote client will not know, or care, that the connection hasn’t been yet accepted by the application. In fact, the client can even start sending data through the socket before the server has done ServerSocket.accept(). For example, if we put a thread-sleep right after the bind(), and then a remote client connects AND sends the content Hello World!, we would see the following:

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 1 1 *:3081 *:*

ESTAB 13 0 192.168.1.110:3081 192.168.1.103:47730

The receive buffer of the connected socket shows 13 bytes: the 12 characters of Hello World! plus, presumably, the newline sent along with them. This is as expected, since each ASCII character takes 1 byte in UTF-8 encoding. Clearly, the OS happily received the data. However, because the application hasn’t had a chance to accept the connection yet, the data is buffered on the RecvQ until the application accepts and reads from it.

This means that accept blocks the calling thread until a new connection is available from the OS, but the reverse is not true. The underlying OS will establish TCP connections for the application even if the program is not currently blocked at accept. In other words, accept asks the OS for the first ready-to-use connection, but the OS does not wait for the application to accept connections in order to establish new ones. It might establish many more.
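The same behaviour can be reproduced without a second computer. In the sketch below, a client connects and sends a line before the server side has called accept(). The data waits in the OS until the application accepts and reads. The class name DataBeforeAcceptDemo and the helper readDataSentBeforeAccept are hypothetical, and the 200 ms sleep merely stands in for an application that is busy doing something else.

```java
import java.io.BufferedReader;
import java.io.InputStreamReader;
import java.io.OutputStream;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

public class DataBeforeAcceptDemo {

  static String readDataSentBeforeAccept(String line) throws Exception {
    try (ServerSocket server = new ServerSocket(0, 1, InetAddress.getLoopbackAddress());
         // The connection is established by the OS even though accept() was not called yet.
         Socket client = new Socket(server.getInetAddress(), server.getLocalPort())) {

      OutputStream out = client.getOutputStream();
      out.write((line + "\n").getBytes(StandardCharsets.UTF_8));
      out.flush();

      Thread.sleep(200); // the application is "busy" while the OS buffers the data

      try (Socket accepted = server.accept()) { // returns at once, the connection is ready
        BufferedReader reader = new BufferedReader(
            new InputStreamReader(accepted.getInputStream(), StandardCharsets.UTF_8));
        return reader.readLine(); // consumes what was waiting in the receive queue
      }
    }
  }

  public static void main(String[] args) throws Exception {
    System.out.println(readDataSentBeforeAccept("Hello World!"));
  }
}
```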

This is where the backlog parameter on the ServerSocket constructor comes into play. It suggests to the OS how many established TCP connections it should hold in a queue while they wait to be accepted by the application. Naturally, you would want there to be a bound. It is useful when the application is slow to accept connections. For example, in the listing above, the application is only ever going to accept one connection, so setting backlog as low as possible would help cap the number of needless connections established by the OS.

Blocking

We have seen that ServerSocket.accept and Socket.connect are blocking operations. They might block the calling thread indefinitely, but said blocking only occurs once. More impactful is the blocking associated with reading and writing on the socket, as that might occur any number of times during its lifetime.

Why do these calls block? The simple answer is that it’s just how the API was designed.

Let’s start with reading. In a TCP connection, regardless of whether we are using blocking, non-blocking, or async sockets, the app trying to read from the socket has no control over when the remote host will send data, if ever. This is true at a conceptual level alone. The server simply can’t read what has not been sent. Perhaps in certain scenarios, you can have a tight protocol on top of TCP such that you only attempt to read when you are fairly certain that the client has already sent data. But even in such limited cases, you can’t account for the remote host being under load and therefore slower, or the network being very bad.

Given this, if you were the API designer, at a conceptual level, what options would you have?

The simplest one is to, well, block the thread until data arrives. It certainly seems the easiest to understand, not least because the code remains sequential from the point of view of the programmer.

Another one would be the programmer passing a function to the API call, and then “the system” makes sure that callback function is applied to the data once it arrives. But how would the API implementation itself “wait” for the data? Very interesting question indeed.

Another option is to design an API such that the programmer can poll the socket to determine if it has data that can be read. Each polling call itself doesn’t block. If there is data available, then you can call a blocking read knowing it will not actually block.

The descriptions naturally correspond to the blocking, async, and non-blocking “types” of sockets. So blocking sockets block because that is one of the ways they could have been designed. The motivation for them was likely that it is a more straightforward mechanism to handle socket I/O. It is easier to understand and work with for programmers, as it allows the program to be sequential. In contrast, the non-blocking version is more complex on the programmer’s side, as he/she has to manage how to poll periodically, and what useful work to do in case there is no data to be read.
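The blocking read can be observed directly with a sketch in which the client connects but never sends anything. To avoid hanging forever, the example relies on setSoTimeout, listed in the abbreviated API above, which makes a blocked read give up with a SocketTimeoutException after the given number of milliseconds. The class name ReadTimeoutDemo and the helper readTimesOut are hypothetical.

```java
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.net.SocketTimeoutException;

public class ReadTimeoutDemo {

  // Returns true if read() was still blocked when the timeout expired.
  static boolean readTimesOut(int timeoutMillis) throws Exception {
    try (ServerSocket server = new ServerSocket(0, 1, InetAddress.getLoopbackAddress());
         Socket client = new Socket(server.getInetAddress(), server.getLocalPort());
         Socket accepted = server.accept()) {
      accepted.setSoTimeout(timeoutMillis); // without this, read() could block indefinitely
      try {
        accepted.getInputStream().read(); // the client never sends, so this blocks
        return false;
      } catch (SocketTimeoutException e) {
        return true; // the thread was parked in read() for the whole timeout
      }
    }
  }

  public static void main(String[] args) throws Exception {
    long start = System.nanoTime();
    boolean timedOut = readTimesOut(500);
    long elapsedMillis = (System.nanoTime() - start) / 1_000_000;
    System.out.println("Timed out: " + timedOut + " after about " + elapsedMillis + " ms");
  }
}
```

Note that the timeout only bounds how long the thread stays blocked. The thread still does nothing else while it waits.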

Full queues and blocking

Above, we explained why reads block. Here we explain both why and how writes block.

Conceptually, writing data to a TCP socket must be a blocking operation if TCP is ever to uphold one of its features: Reliability. If no data is to be lost between sender and receiver, then TCP must ensure that the producer does not produce more data than the receiving end is reading. It logically follows that apart from finite buffering, the only solution is to halt the production.

For example, imagine on the illustration the client is a water tap, the network is a pipe, the RecvQ is a bucket, and the server is a person drinking. If we assume all the water from the tap must be drunk by the person, then laws of physics – conservation of mass – dictate that if the person is not drinking as fast as the tap is pouring, water accumulates on the bucket, and once it fills the tap must be closed, until the person catches up with the drinking.

The closing of the tap on blocking sockets corresponds to the calling thread blocking.

It need not block though. We could imagine an alternative. Similarly to above, the API write method could allow us to poll if writing into the socket would block or not. If it did block, then the thread could go on to do other useful work.
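A rough way to watch a write block is to connect a client to a server that accepts the connection but never reads, and count how many bytes the client manages to hand over before write() stops returning. The sketch below assumes that half a second without progress means the writer is blocked, which is a heuristic and not a guarantee. The class name WriteBlocksDemo and the helper bytesWrittenBeforeBlocking are hypothetical, and the number printed depends on the queue sizes chosen by the OS.

```java
import java.io.OutputStream;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.util.concurrent.atomic.AtomicLong;

public class WriteBlocksDemo {

  // Returns how many bytes were written before write() stopped returning.
  static long bytesWrittenBeforeBlocking() throws Exception {
    AtomicLong written = new AtomicLong();
    try (ServerSocket server = new ServerSocket(0, 1, InetAddress.getLoopbackAddress());
         Socket client = new Socket(server.getInetAddress(), server.getLocalPort());
         Socket accepted = server.accept()) { // accepted, but the server never reads

      Thread writer = new Thread(() -> {
        byte[] chunk = new byte[1024];
        try {
          OutputStream out = client.getOutputStream();
          while (true) {
            out.write(chunk); // blocks once the server RecvQ and the client SendQ are full
            written.addAndGet(chunk.length);
          }
        } catch (Exception e) {
          // Expected: the sockets are closed at the end, which unblocks the pending write.
        }
      });
      writer.setDaemon(true);
      writer.start();

      // Heuristic: if the counter did not move for 500 ms, assume the writer is blocked.
      long previous = -1;
      while (written.get() != previous) {
        previous = written.get();
        Thread.sleep(500);
      }
      return previous;
    }
  }

  public static void main(String[] args) throws Exception {
    System.out.println("Bytes written before blocking: " + bytesWrittenBeforeBlocking());
  }
}
```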

Blocking example – Code and animation

The best way to develop an intuition for blocking is to watch the OS’s RecvQ and SendQ as an application advances and subsequently blocks.

Below we have some code, and a corresponding gif animation. The code corresponds to two applications: The client loops around only sending data to a server, which in turn loops around and only ever prints what it receives. The example is contrived. It is designed so that we can halt both “the sending” of the client, and “the reading” of the server. This is done by pressing Enter on the respective terminal.

Analyse the code and watch the animation until it becomes clear. You cannot see the user (i.e. me) pressing Enter on the terminal directly, but every time this happens a message is printed onto the window, as evident in the code listings.

The code isn’t particularly elegant. I won’t analyse it in detail. But a few comments are important:

- The client sends rows of a single letter, cycling from A to Z. This was done so that it is easy to spot when that row is read by the server.

- Notice that on the client, the character ✅ is printed after the socket write, so if the write blocks, you won’t see the ✅ on the terminal output.

- Regarding the server, the code powering the animation is a more complex version of the one listed. The original was trimmed for conciseness. The difference is that, once the server is halted, the user can specify a number of bytes to read with the pattern +<digits>.

- The ad-hoc thread on both client and server is how we concurrently listen for user input from the keyboard terminal (i.e. me pressing Enter).

Client app

import java.io.*;

import java.net.*;

import java.nio.charset.StandardCharsets;

import java.util.stream.Collectors;

import java.util.stream.IntStream;

public class SendAtoZClientApp {

static volatile boolean halt = true;

// Imagine the parameters used (e.g. localPort) are parsed from input args

public static void main(String[] args) throws Exception {

Socket socket = new Socket();

socket.bind(new InetSocketAddress(localAddress, localPort));

socket.setSendBufferSize(sendBufferSize);

socket.connect(new InetSocketAddress(remoteAddress, remotePort));

OutputStreamWriter out =

new OutputStreamWriter(socket.getOutputStream(), StandardCharsets.UTF_8);

// Concurrent thread listens for input from the user that controls the behaviour.

// On each Enter the app either halts or resumes writing to the socket.

new Thread(

() -> {

while (true) {

System.in.read(); // Needs a try/catch. Ignored for conciseness.

if (halt) {

System.out.println("Continuing ...");

halt = false;

} else {

System.out.println("Stopping ...");

halt = true;

}

}

})

.start();

char[] f =

IntStream.rangeClosed('A', 'Z')

.mapToObj(c -> "" + (char) c)

.collect(Collectors.joining())

.toCharArray();

for (int i = 0; ; ++i) {

if (!halt) {

char charIteration = f[i % f.length];

String toSend = String.valueOf(charIteration).repeat(35);

System.out.print("Sending: " + toSend);

out.write(toSend + "\n");

out.flush();

System.out.println(" ✅");

}

Thread.sleep(sleepInterval); // There are better ways, but we need conciseness

}

}

}

Server app

import java.io.*;

import java.net.*;

import java.nio.charset.StandardCharsets;

import java.util.stream.Collectors;

import java.util.stream.IntStream;

public class PrintReceivedServerApp {

static volatile boolean halt = true;

// Imagine the parameters used (e.g. localPort) are parsed from input args

public static void main(String[] args) throws Exception {

ServerSocket serverSocket = new ServerSocket(localPort, backlog, localAddress);

serverSocket.setReceiveBufferSize(receiveBufferSize);

Socket socket = serverSocket.accept();

socket.setReceiveBufferSize(receiveBufferSize);

InputStream inputStream = socket.getInputStream();

// Concurrent thread listens for input from the user that controls the behaviour.

// On each Enter the app either halts or resumes reading from the socket.

new Thread(

() -> {

while (true) {

System.in.read(); // Needs a try/catch. Ignored for conciseness.

if (halt) {

halt = false;

System.out.println("Continuing ...");

} else {

halt = true;

System.out.println("Stopping ...");

}

}

})

.start();

while (true) {

if (halt) {

Thread.sleep(100); // There are better ways, but we need conciseness

} else {

int mayChar = inputStream.read();

if (mayChar == -1) {

System.out.println("Stream ended. -1 received from input stream. Exiting.");

System.exit(0);

}

System.out.print((char) mayChar);

}

}

}

}

In the animation, the right panel corresponds to the terminal for the client, and the left panel to the server’s.

Most importantly, the bottom pane shows the TCP structures for the TCP connections. As before this is done with netstat. We see two ESTAB connections because, unlike the examples on the section before, the client app is running on the same host, and not on a different computer. The second socket corresponds to the server. It can be identified because it has the same port as the listening ServerSocket: 3081. The last is the established socket of the tcp-client. Its local port 48427 was randomly assigned as we didn’t specify it.

The server doesn’t send data, and the client doesn’t read. So, the buffers that matter are RecvQ of the server, and the SendQ of the client. When these fill up, the client blocks!

As for the sizes of these queues, they are set explicitly to only a few bytes. The OS has a minimum cap and disregards such low value. The actual size briefly appears on the terminal, if you are swift enough to notice at the beginning.

The terminal recording shows 4 things:

- When the receiving end consumes fast enough, the queues are empty.

- When the client stops sending data, obviously the server side can’t print anything.

- Most importantly, when the server does not read from the socket, the client can proceed and the data is first buffered in the Recv-Q of the server. When this fills up, the data is buffered on the Send-Q of the client. In both scenarios, as these queues are managed by the OS, the Java application is unaffected and the client keeps writing successfully. When the Send-Q finally fills up, the OutputStream.write() blocks the calling thread. That call completes only when there is available space on the queue again.

- As expected, once halted, when we instruct the server to read a specific number of bytes, an equal number of bytes is freed from the RecvQ. In other words, reads from the Java application consume from the RecvQ of the underlying TCP implementation.

Interestingly, notice that when both buffers are full, the client does not resume after the server consumes a minimum amount of data.
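If you don't have the animation at hand, the same behaviour can be reproduced with a few lines of code. What follows is a minimal sketch and not the app from the recording: the class and method names are made up for illustration, and both ends run in one process over the loopback interface. One side accepts the connection but never reads, while the other writes until its write() call stops returning.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.util.concurrent.atomic.AtomicLong;

// Sketch (hypothetical names): a writer whose peer never reads
// eventually blocks inside OutputStream.write().
class BlockedWriteDemo {

    // Returns how many bytes were accepted by completed write() calls
    // before the writer thread stalled.
    static long bytesWrittenBeforeBlocking() throws Exception {
        AtomicLong written = new AtomicLong();
        try (ServerSocket server = new ServerSocket(0, 1, InetAddress.getLoopbackAddress());
             Socket client = new Socket(server.getInetAddress(), server.getLocalPort());
             Socket accepted = server.accept()) { // accepted, but never read from

            Thread writer = new Thread(() -> {
                byte[] chunk = new byte[8 * 1024];
                try {
                    OutputStream out = client.getOutputStream();
                    while (true) {
                        out.write(chunk); // blocks once Recv-Q and Send-Q are full
                        written.addAndGet(chunk.length);
                    }
                } catch (IOException e) {
                    // Expected: closing the socket at the end unblocks the write.
                }
            });
            writer.setDaemon(true);
            writer.start();

            // Poll until the counter has stopped growing for a full second.
            long previous;
            long current = written.get();
            do {
                previous = current;
                Thread.sleep(1000);
                current = written.get();
            } while (current == 0 || current != previous);
            return current;
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println("write() stalled after " + bytesWrittenBeforeBlocking() + " bytes");
    }
}
```

The number printed depends on the buffer sizes chosen by the OS, so expect it to vary between machines. What matters is that the writer thread stops making progress without any error being reported.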

Threads and blocking

To illustrate the role and necessity of threads when using blocking sockets, let us develop a server application that sends back to the remote whatever data it receives. The simplicity of such an Echo Server allows us to focus on the fundamentals. We will ignore developing a corresponding “echo client” side. If you are trying this locally, you can once more use netcat. On Linux, typing nc <ip> <port> on the terminal establishes a connection to the server, with input and output displayed on the screen.

The first implementation, One Connection, is incredibly simple. There is a single thread, which accepts a connection, and then loops around indefinitely, only exiting when the client terminates – potentially never. On each iteration, it listens for data, blocking if needed, and then writes it straight back to the client. The read operation reads up to the buffer size worth of data. Importantly, the read does not block until the entire buffer is filled. It can return when as little as 1 byte has been copied from the RecvQ, regardless of the buffer size. The client sending data sees no latency. It “hears the echo” immediately.

One Connection

import java.net.*;

public class OneConnectionServer {
    // For brevity and clarity, I avoid proper error handling.
    public static void main(String[] args) throws Exception {
        int localPort = Integer.parseInt(args[0]); // the port to listen on
        byte[] buffer = new byte[1024];
        ServerSocket serverSocket = new ServerSocket(localPort);
        Socket socket = serverSocket.accept();
        while (true) {
            int bytesRead = socket.getInputStream().read(buffer);
            if (bytesRead == -1) return;
            socket.getOutputStream().write(buffer, 0, bytesRead);
        }
    }
}

Dummy Improvement

import java.net.*;
import java.util.ArrayList;
import java.util.List;

public class DummyImprovement {
    // For brevity and clarity, I avoid proper error handling.
    public static void main(String[] args) throws Exception {
        int localPort = Integer.parseInt(args[0]); // the port to listen on
        byte[] buffer = new byte[1024];
        ServerSocket serverSocket = new ServerSocket(localPort);
        List<Socket> connections = new ArrayList<>();
        int connectionIndex = 0;
        while (true) {
            Socket newSocket = serverSocket.accept();
            connections.add(newSocket);
            Socket socket = connections.get(connectionIndex);
            int bytesRead = socket.getInputStream().read(buffer);
            // The code snippet is merely illustrative and has issues:
            // below, if a client disconnects, the entire server shuts down.
            if (bytesRead == -1) return;
            socket.getOutputStream().write(buffer, 0, bytesRead);
            if (connectionIndex == connections.size() - 1) connectionIndex = 0;
            else connectionIndex = connectionIndex + 1;
        }
    }
}

SetSoTimeout

import java.net.*;
import java.util.ArrayList;
import java.util.List;

public class SoTimeoutServer {

    private static int nextIndex(int current, int size) {
        if (current == size - 1) return 0;
        else return current + 1;
    }

    public static void main(String[] args) throws Exception {
        // Illustrative values (assumptions). Imagine they are parsed from args.
        String localAddress = "0.0.0.0";
        int localPort = 3081, backlog = 50;
        int receiveBufferSize = 64 * 1024, sendBufferSize = 64 * 1024;
        int acceptTimeout = 10, readTimeout = 10; // milliseconds

        ServerSocket serverSocket = new ServerSocket();
        serverSocket.setReceiveBufferSize(receiveBufferSize);
        serverSocket.bind(new InetSocketAddress(localAddress, localPort), backlog);
        List<Socket> connections = new ArrayList<>();
        byte[] buffer = new byte[1024];
        int connectionIndex = 0;

        while (true) {
            Socket socket;
            try {
                // In the special case there are no connections, we actually want to block
                if (connections.isEmpty()) serverSocket.setSoTimeout(0);
                socket = serverSocket.accept();
                socket.setReceiveBufferSize(receiveBufferSize);
                socket.setSendBufferSize(sendBufferSize);
                socket.setSoTimeout(readTimeout);
                serverSocket.setSoTimeout(acceptTimeout);
            } catch (Exception e) {
                // Typically a SocketTimeoutException: no client arrived within acceptTimeout.
                socket = null;
            }
            if (socket != null) {
                connections.add(socket);
                System.out.println("Socket added ...");
            }
            if (connections.isEmpty()) break;

            // Select the socket to read from on this iteration
            Socket s = connections.get(connectionIndex);

            // If the socket has no data available, reading would block. Instead,
            // update the index and move to the next iteration.
            // Unfortunately there is a flaw with this method. Read below.
            if (s.getInputStream().available() <= 0) {
                connectionIndex = nextIndex(connectionIndex, connections.size());
                continue;
            }

            int numBytesRead;
            try {
                numBytesRead = s.getInputStream().read(buffer);
            } catch (SocketTimeoutException e) {
                connectionIndex = nextIndex(connectionIndex, connections.size());
                continue;
            }

            if (numBytesRead == -1) {
                System.out.printf("Removing %s from socket list ...\n", s.getRemoteSocketAddress());
                connections.remove(s);
                // Clamp at 0 so the index stays valid even when the list becomes empty.
                connectionIndex = Math.max(0, Math.min(connectionIndex, connections.size() - 1));
                continue;
            }

            s.getOutputStream().write(buffer, 0, numBytesRead);
            connectionIndex = nextIndex(connectionIndex, connections.size());
        }
    }
}

The One Connection implementation has an obvious flaw. It is only capable of serving a single client. There is only a single thread, and after accepting the first connection, the code moves onto the infinite loop. Subsequent clients might be able to establish a connection with the OS, depending on the backlog, but our app will never process them.

How can we serve many clients?

Serving many clients is easy. Just insert an additional outer loop on line 9 of the One Connection listing, just before the serverSocket.accept(). The result would be an app that serves clients sequentially. A client would have to close the connection for the inner loop to break and another client to be served. This approach might suffice if the service to be provided is “very quick”. Imagine a server that provides the current time. Maybe with a large backlog it would be feasible. For anything else, it is unacceptable. What we need is to serve multiple clients concurrently.
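To make the sequential variant concrete, here is a minimal sketch of the One Connection server with the extra outer loop. The class name, the echoOnce helper and the default port are assumptions made for illustration only.

```java
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

// Sketch (hypothetical names): the One Connection server plus an outer accept loop.
class SequentialEchoServer {

    // Serves clients one at a time. The next accept() only happens
    // after the current client has closed its connection.
    static void serve(ServerSocket serverSocket) throws IOException {
        byte[] buffer = new byte[1024];
        while (true) { // outer loop: one client per iteration
            try (Socket socket = serverSocket.accept()) {
                InputStream in = socket.getInputStream();
                OutputStream out = socket.getOutputStream();
                int bytesRead;
                while ((bytesRead = in.read(buffer)) != -1) { // inner loop: echo until end of stream
                    out.write(buffer, 0, bytesRead);
                }
            }
        }
    }

    // Helper for trying the server out: sends a message and returns the echo.
    static String echoOnce(int port, String message) throws IOException {
        try (Socket client = new Socket(InetAddress.getLoopbackAddress(), port)) {
            byte[] payload = message.getBytes(StandardCharsets.UTF_8);
            client.getOutputStream().write(payload);
            byte[] reply = new byte[payload.length];
            new DataInputStream(client.getInputStream()).readFully(reply);
            return new String(reply, StandardCharsets.UTF_8);
        }
    }

    public static void main(String[] args) throws IOException {
        // 3081 is an arbitrary default, used when no port is given.
        int port = args.length > 0 ? Integer.parseInt(args[0]) : 3081;
        serve(new ServerSocket(port));
    }
}
```

With this version a second client can connect while the first is being served, but it only hears its echo once the first one disconnects.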

Instead, we could try to move the accept into the single loop. Each iteration would 1) accept a new connection, 2) insert it at the end of a list, and 3) read from and write to a connection of the list. This is done on the Dummy Improvement tab.

This would not work. The issue is again that the API is blocking. accept, read, and write might block indefinitely, therefore preventing a new iteration. The app will neither accept new connections nor serve existing ones in a timely manner. Imagine we accept the first connection and echo the 1024 bytes for the client. On the second loop iteration we would block again at accept, and be dependent on a new client connecting for the app to serve the first client.

The code could become more sophisticated. We could move accepting new connections to a separate thread, but continue reading and writing across all connections on the main thread. But read and write can still block. If a particular client pauses sending data, once we attempt to read from its socket, the thread blocks, and all the remaining clients stop hearing their echo.

The problem is the blocking nature of the API. What we would want, is the semantics to be something like:

Socket newSocket = serverSocket.accept()

Accept and return a new connection if available. If not available, immediately return a null value.

int bytesRead = socket.getInputStream().read(buffer)

If data is available, read it into the buffer. Otherwise, immediately return 0.

socket.getOutputStream().write(buffer)

If the write buffer of the underlying TCP implementation is not full, and has at least as much free space as the size of the buffer to be written, write the data. Otherwise, immediately throw an exception (or return false).

Semantics similar to these are indeed available. It is what non-blocking Sockets are all about.
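As a small taste of what that looks like, the sketch below uses the java.nio.channels classes in non-blocking mode. It is only meant to show the "return immediately" semantics for accept and read. The class and method names are hypothetical, and a real non-blocking server would normally be built around a Selector rather than polling.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;

// Sketch (hypothetical names): accept and read that return immediately.
class NonBlockingSemanticsDemo {

    // accept() on a non-blocking channel returns null when no client is waiting.
    static boolean acceptReturnsNullWhenIdle() throws IOException {
        try (ServerSocketChannel server = ServerSocketChannel.open()) {
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            server.configureBlocking(false);
            return server.accept() == null;
        }
    }

    // read() on a non-blocking channel returns 0 when the peer has sent nothing.
    static int readFromSilentPeer() throws IOException, InterruptedException {
        try (ServerSocketChannel server = ServerSocketChannel.open()) {
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            server.configureBlocking(false);
            try (SocketChannel client = SocketChannel.open(server.getLocalAddress())) {
                SocketChannel accepted;
                while ((accepted = server.accept()) == null) {
                    Thread.sleep(10); // poll until the connection shows up
                }
                try (SocketChannel connection = accepted) {
                    connection.configureBlocking(false);
                    return connection.read(ByteBuffer.allocate(1024));
                }
            }
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println("accept() returned null: " + acceptReturnsNullWhenIdle());
        System.out.println("read() returned: " + readFromSilentPeer());
    }
}
```

Writes follow the same idea: on a non-blocking SocketChannel, write returns the number of bytes it managed to hand over, which can be 0 when the send buffer is full.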

For “standard” sockets, the solution is generally to use multiple threads as discussed further down. There is however a way to improve on these shortcomings using setSoTimeout(). You see, it is not completely accurate to say blocking sockets might block indefinitely on accept and read. It is possible to configure timeouts for these operations.

ServerSocket.setSoTimeout(<timeOutMillis>) configures the server socket to wait only the predefined amount of time for a new connection from the OS. After that it throws a java.net.SocketTimeoutException.

Similarly, Socket.setSoTimeout(<timeoutMillis>) sets the maximum amount of time for reads on the input-stream of the socket to wait for any bytes. After that, the same exception is thrown.
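The two timeouts can be seen in isolation with the sketch below. The class and method names are made up for the example, and everything runs over the loopback interface on a port picked by the OS.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.net.SocketTimeoutException;

// Sketch (hypothetical names): accept and read giving up after a timeout.
class SoTimeoutDemo {

    // Returns true if accept() gave up after timeoutMillis because no client connected.
    static boolean acceptTimesOut(int timeoutMillis) throws IOException {
        try (ServerSocket server = new ServerSocket(0, 50, InetAddress.getLoopbackAddress())) {
            server.setSoTimeout(timeoutMillis); // set before entering accept()
            try (Socket unexpected = server.accept()) {
                return false; // a client connected in time
            } catch (SocketTimeoutException e) {
                return true; // the ServerSocket is still valid and can accept again
            }
        }
    }

    // Returns true if read() gave up after timeoutMillis because the peer sent nothing.
    static boolean readTimesOut(int timeoutMillis) throws IOException {
        try (ServerSocket server = new ServerSocket(0, 50, InetAddress.getLoopbackAddress());
             Socket client = new Socket(server.getInetAddress(), server.getLocalPort());
             Socket accepted = server.accept()) {
            accepted.setSoTimeout(timeoutMillis); // set before entering read()
            try {
                accepted.getInputStream().read(new byte[1024]);
                return false; // data or end of stream arrived in time
            } catch (SocketTimeoutException e) {
                return true; // the Socket is still valid and can be read again
            }
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println("accept timed out: " + acceptTimesOut(100));
        System.out.println("read timed out: " + readTimesOut(100));
    }
}
```

Note that a timeout of 0 means "wait forever", which is the default behaviour of blocking sockets.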

In both cases, the timeout must be set before the sockets enter the blocking call. Even better still, the input-stream has a method available(), that gives an estimated amount of bytes that can be read without blocking. With these features, we are better equipped to design a single-threaded echo server. The listing on the SetSoTimeout tab is a considerable improvement. It serves many clients concurrently in a timely manner. But there are still flaws:

- On each iteration we block on the server socket for acceptTimeout. So in the worst case, the last connection to be served has to wait acceptTimeout × number of connections. Reducing acceptTimeout can only go to 1 millisecond, and probably we would need to increase backlog, or risk missing connections in certain scenarios. A better approach is to have another thread dealing with the ServerSocket.accept().

- The available() check on the input stream returns 0 both when there are no bytes to read, and also when end of stream is detected – which occurs when a client disconnects. There is no apparent way to distinguish between the scenarios. This means the code listing above will not be able to remove the sockets of the clients that have disconnected. To address this, we could instead rely on Socket.setSoTimeout(<timeoutMillis>). This would have the same disadvantage as point 1. Better is to mix the two approaches. If available() for a socket returns 0 for a long time, do a read with a small timeout to check if -1 is returned and remove the socket.
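The "read with a small timeout" probe from the second point could look roughly like this. It is a sketch under assumptions: readWithProbe and the demo method are hypothetical names, and the server would call the probe only for sockets whose available() has stayed at 0 for a while.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.net.SocketTimeoutException;

// Sketch (hypothetical names): telling an idle client apart from a disconnected one.
class DisconnectProbeDemo {

    // Returns the number of bytes read, 0 if nothing arrived within probeMillis
    // (idle but connected), or -1 if the peer has disconnected.
    // probeMillis must be greater than 0, since 0 would mean "wait forever".
    static int readWithProbe(Socket socket, byte[] buffer, int probeMillis) throws IOException {
        socket.setSoTimeout(probeMillis);
        try {
            return socket.getInputStream().read(buffer);
        } catch (SocketTimeoutException e) {
            return 0;
        }
    }

    // Probes one idle connection and one closed by the client.
    // Returns the two probe results, in that order.
    static int[] demo() throws IOException {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        byte[] buffer = new byte[1024];
        try (ServerSocket server = new ServerSocket(0, 50, loopback);
             Socket idleClient = new Socket(loopback, server.getLocalPort());
             Socket idleSide = server.accept();
             Socket goneClient = new Socket(loopback, server.getLocalPort());
             Socket goneSide = server.accept()) {
            goneClient.close(); // the client disconnects without sending anything
            int idle = readWithProbe(idleSide, buffer, 200);
            int gone = readWithProbe(goneSide, buffer, 2000);
            return new int[] { idle, gone };
        }
    }

    public static void main(String[] args) throws IOException {
        int[] results = demo();
        System.out.println("idle client: " + results[0] + ", disconnected client: " + results[1]);
    }
}
```

Because the probe is a real read, any bytes that do arrive end up in the buffer and must be echoed like in the normal path.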

This approach mitigates the effects of blocking. But ultimately, if we don’t want to block, then we must use the NIO API, because there is a last piece of the puzzle which cannot be solved. It is the writes!

It is not possible to set a timeout for socket writes. Writes will block if both buffers are full. Given this, we can completely halt the server above, such that it cannot serve any client. This will happen if a single client stops reading, but continues writing. In that case, the echo server would echo the writes, which would end up filling the RecvQ of the client. Once full, the SendQ of the server would fill up too. At that point, the write() on the server’s socket would block, and the server could not move onto the next iteration and serve any other client.

Note

If you are using netcat as a client and try to make the echo server unusable by stopping the client from reading from the socket, this might prove difficult to do. Instead, you can use the client app from the animation example.

To conclude, we are left with the choice blocking servers take. This was the approach before the NIO API appeared: Multi-threading, and in particular one or more threads per connection. The gist is, one thread loops around accepting connections, and upon each new connection we spawn a new thread to process it. The end result is as many threads as there are active connections.

With this approach we achieve an echo server that serves many clients in a timely manner.

Multi-Threaded Echo Server

import java.io.IOException;
import java.net.*;

public class MultiThreadedServer {

    private static class Connection extends Thread {
        private final Socket socket;
        private final byte[] buffer = new byte[1024];

        private Connection(Socket socket) {
            this.socket = socket;
        }

        @Override
        public void run() {
            try {
                while (true) {
                    int bytesRead = socket.getInputStream().read(this.buffer);
                    if (bytesRead == -1) return;
                    socket.getOutputStream().write(this.buffer, 0, bytesRead);
                }
            } catch (Exception e) {
                System.out.printf("Connection to %s finished with an error.%n", socket.getRemoteSocketAddress());
            } finally {
                try {
                    socket.close();
                } catch (IOException e) {
                    // Nothing useful to do if closing fails.
                }
            }
        }
    }

    public static void main(String[] args) throws IOException {
        int localPort = Integer.parseInt(args[0]); // the port to listen on
        ServerSocket serverSocket = new ServerSocket(localPort);
        while (true) {
            Socket socket = serverSocket.accept();
            // From this moment onwards, the code is concurrent!!
            // Starting a thread per connection. The thread exits once the client disconnects.
            // Alternatively, we could use a thread-pool to manage the threads.
            new Connection(socket).start();
        }
    }
}

Having multiple threads comes with two main problems.

On the one hand, threads are relatively expensive. As mentioned earlier, each must have a stack. Creating and deleting them comes with overhead as well. For highly performant applications handling thousands of connections, this becomes an issue. An improvement over the listing above would be to use a thread-pool, rather than creating a thread explicitly for each new connection. The thread-pool could then reuse threads, and limit some of the overhead of creating/deleting threads ad-hoc.
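A thread-pool variant could be sketched as follows. This is one possible shape and not the listing from the article: the class name, the maxThreads parameter and the default values in main are assumptions, and the pool comes from the standard java.util.concurrent.Executors factory.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.ServerSocket;
import java.net.Socket;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Sketch (hypothetical names): the multi-threaded echo server backed by a fixed pool.
class PooledEchoServer {

    // Accepts connections on the calling thread and hands each one to the pool.
    static void serve(ServerSocket serverSocket, int maxThreads) throws IOException {
        ExecutorService pool = Executors.newFixedThreadPool(maxThreads);
        try {
            while (true) {
                Socket socket = serverSocket.accept();
                pool.execute(() -> echo(socket)); // threads are reused across connections
            }
        } finally {
            pool.shutdownNow(); // reached when accept() fails, e.g. the server socket was closed
        }
    }

    // Echoes everything received on the connection until the client disconnects.
    private static void echo(Socket socket) {
        try (Socket s = socket) {
            byte[] buffer = new byte[1024];
            InputStream in = s.getInputStream();
            OutputStream out = s.getOutputStream();
            int bytesRead;
            while ((bytesRead = in.read(buffer)) != -1) {
                out.write(buffer, 0, bytesRead);
            }
        } catch (IOException e) {
            System.out.printf("Connection to %s finished with an error.%n", socket.getRemoteSocketAddress());
        }
    }

    public static void main(String[] args) throws IOException {
        // Arbitrary defaults: port 3081 and at most 100 concurrent connections.
        int port = args.length > 0 ? Integer.parseInt(args[0]) : 3081;
        serve(new ServerSocket(port), 100);
    }
}
```

The trade-off of a fixed pool is that it caps the number of connections served at the same time. With maxThreads busy, further accepted connections wait in the pool's queue until a thread frees up.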

On the other hand, having multiple threads becomes orders of magnitude more difficult in stateful applications. In our echo server, connections are stateless and completely independent of one another. This is incredibly simple. In the real world, our server application would manage some state, which would be influenced by each client connection. A big problem would then arise. As there are multiple threads reading and writing to shared state, we would need mechanisms to make sure we synchronize access correctly. This is indeed the central problem in concurrency. A simple example of such an app is a chat-server. Each client, and therefore each thread, would want to read the latest messages and also submit new messages.

Important

Synchronizing access to shared state is the central problem in concurrency.
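To give a feel for what that means in the chat-server example, here is a minimal sketch of the shared state only, with no sockets involved. The ChatLog class and its methods are hypothetical. Each connection thread would call submit and latest, and the synchronized keyword makes sure only one thread touches the list at a time.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch (hypothetical names): message log shared by all connection threads.
class ChatLog {
    private final List<String> messages = new ArrayList<>();

    // Called by a connection thread when its client submits a message.
    synchronized void submit(String message) {
        messages.add(message);
    }

    // Returns a copy of the last `count` messages, so callers never see
    // the list while another thread is modifying it.
    synchronized List<String> latest(int count) {
        int from = Math.max(0, messages.size() - count);
        return new ArrayList<>(messages.subList(from, messages.size()));
    }

    synchronized int size() {
        return messages.size();
    }

    // Simulates several clients submitting at once and returns the final log size.
    static int submitConcurrently(int threads, int messagesPerThread) throws InterruptedException {
        ChatLog log = new ChatLog();
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            int clientId = t;
            Thread worker = new Thread(() -> {
                for (int i = 0; i < messagesPerThread; i++) {
                    log.submit("client-" + clientId + ": message " + i);
                }
            });
            workers.add(worker);
            worker.start();
        }
        for (Thread worker : workers) {
            worker.join();
        }
        return log.size();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("messages stored: " + submitConcurrently(8, 1000));
    }
}
```

Without the synchronized keyword, concurrent calls to ArrayList.add can lose messages or throw, which is exactly the class of bug that makes stateful multi-threaded servers hard.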

Conclusion

- TCP is implemented by the Operating System where the JVM runs.

- The Socket APIs provided by Java/Scala are a facade to use that TCP implementation.

- TCP has certain characteristics, such as 1) being connection-based, and 2) being stream-based, that are reflected onto any API and its semantics.

- There are 3 independent types of such APIs: blocking, non-blocking, and async.

- Blocking APIs block the calling thread on the main operations: accept, read, and write.

- Because of its blocking nature, applications normally use one or more threads per connection. This adds an overhead that becomes important for applications handling many connections.

- Using timeouts on accept and read is an alternative to having 1 thread per connection. Ultimately, this is not sufficient, particularly because there is no such timeout for write.

- Non-blocking sockets are the solution.